Advanced Chip Packaging Satisfies Smartphone Needs

Clever chip packaging means mobile devices can be smaller and smarter

By Pushkar Apte, W. R. Bottoms, William Chen, George Scalise

Posted 28 Feb 2011 | 16:20 GMT

Illustration: Harry Campbell

We rely on our mobile devices for an almost comically long list of functions: talking, texting, Web surfing, navigating, listening to music, taking photos, watching and making videos. Already, smartphones monitor blood pressure, pulse rate, and oxygen concentration, and before long, they'll be measuring and reporting air-pollutant concentrations and checking whether food is safe to eat. And yet we don't want bigger devices or decreased battery life; the latest Android phones, with their vivid 4.3-inch screens, are already stretching the definition of pocket size, to say nothing of the pockets themselves. The upshot is that the electronics inside the devices have to do more, but without getting any larger, using more power, or costing more.

Transistor density on state-of-the-art chips continues to double at regular intervals, in keeping with the semiconductor industry's decades-old defining paradigm, Moore's Law. Today there are chips with billions of transistors at a price per chip that has headed steadily down for decades. Innovations that pack more and more circuits onto a chip will indeed continue, as will the more recent trend of putting very different functions on a single chip—for example, a microprocessor with an RF signal generator.

If we want to teach our smartphones new tricks, however, we'll have to do more than equip them with denser chips. What we will need more than ever are breakthroughs in an area not previously considered a major hub of innovation: the packaging of those chips. Packaging refers loosely to the conductors and other structures that interconnect the circuits, feed them with electric power, dissipate their heat, and protect them from damage when the device is dropped or otherwise jarred. But today, the drive to pack more functions into a small space and reduce their power requirements demands that chip packages do much more than they ever have before.

A packaged chip is a sort of puzzle, with certain fixed and well-defined pieces. Before we talk about how packaging designers are putting those pieces together in new ways, it will help to review the standard ones.

The astoundingly complex manufacturing process that leads to a chip starts with a wafer, a dinner-plate-size circle of a semiconductor material, typically silicon. Manufacturers etch, print, implant, and perform all sorts of other operations to turn a blank wafer into a grid of rectangles, each about the size of a fingernail and mind-bogglingly dense with transistors and interconnections. Sliced apart, those individual rectangles are what specialists call die. Properly packaged, each die becomes a chip. These days, many people use the terms chip and die interchangeably, but traditionally, the word die referred to a naked integrated circuit without packaging. We'll stick to that traditional terminology here so that we can succinctly make it clear whether we mean a packaged chip or an unpackaged die.

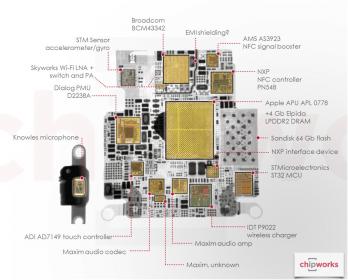

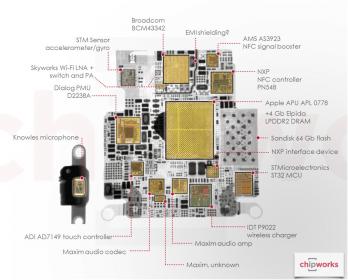

Inside your smartphone, you don't see naked die, of course. You see little plastic slabs of varying sizes, with scores of tiny metal prongs sticking out like insect legs, soldered onto a circuit board. The plastic slabs are the exterior of the packages. The fragile die are inside them, protected from damage during manufacture or use and connected to other chips through those prongs and the traces on the circuit boards.

These circuit boards are critical, of course, to any electronic system, but they don't actually occupy all that much space inside those systems. In fact, if you open up a smartphone today, you'll find that the amount of space allocated to electronics is rather small, so efficient use of that space is key.

Starting in the mid-1970s, designers trying to pack more functionality into a small space created systems on chips. What that means is that they designed digital and analog circuitry, memory, logic, communication, and power elements that were manufactured by a single process on a single die. This integration wasn't easy, because the processes, materials, and technologies optimal for each of these functions tend to be very different. For example, a communication or analog chip might ideally use gallium arsenide as the substrate. It might be built in 180-nanometer technology, which basically means that the smallest features of the devices on that chip measure roughly 180 nm across. A digital processor chip, on the other hand, would use a silicon substrate with 32-nm technology. Power and noise considerations also vary tremendously; the analog chip might require a much higher voltage, and noise from the digital circuitry could interfere significantly with the performance of the analog sections.
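For a rough sense of how far apart those two processes are, the sketch below compares idealized device density at the two nodes named above. It assumes that circuit area scales with the square of the minimum feature size, a textbook simplification that real processes only approximate; the function name is hypothetical.

```python
def ideal_density_ratio(old_node_nm: float, new_node_nm: float) -> float:
    """Idealized gain in devices per unit area between two process nodes.

    Assumes area scales with the square of the minimum feature size,
    which real processes only roughly follow.
    """
    if old_node_nm <= 0 or new_node_nm <= 0:
        raise ValueError("feature sizes must be positive")
    return (old_node_nm / new_node_nm) ** 2


# The analog example above uses 180 nm; the digital one uses 32 nm
print(f"{ideal_density_ratio(180, 32):.1f}x")  # 31.6x
```

Under that idealization, the 32-nm process fits roughly 30 times as many minimum-size features into the same area as the 180-nm one, which hints at how differently the two processes are tuned.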

The upshot is that integration of all those functions onto a single die requires compromises in every circuit type in order to use the same process and material, thus lowering performance and increasing power consumption. A process that works for multiple types of functions is optimal for none.

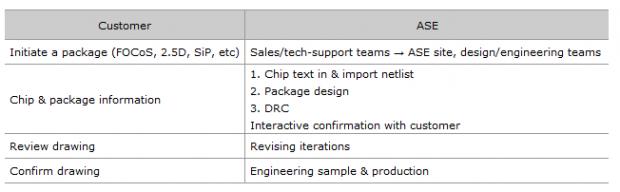

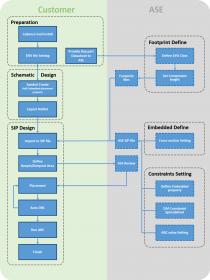

Designers have many methods of creating a system-in-a-package (SiP).

So why bother to cram all those things onto one die? The main advantage is proximity, which reduces the signal-propagation delays that can degrade performance. However, that advantage is often negated by other factors: Incredibly long and complex combinations of processes often increase costs and power consumption, while decreasing performance and yields. These trade-offs make combining disparate functions on a single chip economically unfeasible in many cases. Another barrier to this kind of integration is that hardly any companies have the necessary expertise to make every single type of circuitry needed in such a highly variegated die.

So, starting about a decade ago, designers began taking another approach—the system-in-a-package (SiP).

An SiP is a combination of integrated circuits, transistors, and other components (like resistors and capacitors) on two or more die installed within a single package. A graphics processor is a good example. Along with the processing circuitry, it has memory—both dynamic RAM and flash—as well as passive components like resistors and capacitors sitting on top of a single miniature circuit board, and the whole pile goes inside one package. With smart design integration, an SiP may contain multiple and radically different functions—incorporating, for example, microelectromechanical systems, optical components, sensors, biochemical elements, or other devices within that package. It can even contain multiple system-on-a-chip units that combine some of these functions.

Basically, SiP lets designers mix and match components to get higher performance and get their product to market quickly while spending less on R&D, because they're using existing components. They don't have to go through a long and expensive design cycle every time they need to add a function; they can simply change part of the collection of die within the package.

The SiP approach can also enable smaller products. We all remember the bulky, single-function video cameras that tourists lugged around years ago. As those cameras got smaller, the sizes of some components—the battery, the lens, and the LCD, for example—didn't really change much; people want big displays and lots of power. And the size of a lens is set by its aperture, image sensor, and focal length. So the burden of miniaturization falls on the electronics: When a device shrinks to two-thirds of its former volume, for example (from 450 cubic centimeters in 2006 to 300 cm³ today), the electronics must shrink to a third or less of their original size.
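The text does not say how much of the original 450 cm³ the fixed parts took up, so the sketch below treats that as an input. Assuming, purely for illustration, that the battery, lens, and display filled half the original volume (225 cm³), the electronics must fall from 225 cm³ to 75 cm³, exactly a third. The function name and the 225 cm³ figure are hypothetical.

```python
def electronics_scale_factor(old_total_cm3: float, new_total_cm3: float,
                             fixed_cm3: float) -> float:
    """Fraction of its former volume left for the electronics after a shrink.

    fixed_cm3 is the combined volume of parts assumed not to shrink
    (battery, lens, display); the rest of the enclosure is electronics.
    """
    old_electronics = old_total_cm3 - fixed_cm3
    new_electronics = new_total_cm3 - fixed_cm3
    if old_electronics <= 0 or new_electronics <= 0:
        raise ValueError("fixed parts must fit inside both enclosures")
    return new_electronics / old_electronics


# 450 cm^3 and 300 cm^3 come from the text; 225 cm^3 of fixed parts is hypothetical
print(f"{electronics_scale_factor(450, 300, 225):.3f}")  # 0.333
```

Raising the fixed volume above half of the original enclosure pushes the factor below one-third, which is where the "or less" in the text comes from.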

SiP technology brings another benefit. Data paths between the processor chip and the memory chip are shorter than those on a circuit board, so data flow is faster and noise is reduced. With less distance to travel, signals need less power to get there—another plus. This reduction in size and increase in performance are the driving forces behind the continued evolution of SiP architectures.

There's more than one way to build a system-in-a-package. One of them is called package-on-package (PoP). Remember that circuit board crammed with chips? It looks a little like a suburban office park seen from the air. Well, what better way to cram in more office space than to swap out some one-story buildings for multistory replacements? That's what package-on-package designers are doing. They pack a lot of circuitry into a small volume by stacking one set of connected die on top of another set—flash and DRAM components, for example, on top of an application-specific IC—and then putting them inside a single package so that product designers and manufacturers can deal with them as single units. The sets stack like Lego blocks, typically with logic on the bottom and memory on top. Such structures are adaptable—manufacturers, when necessary, can vary the memory density by swapping out the piece of the stack that holds the memory components, for example. And each of the sets within the package can be tested individually before stacking. After stacking, however, testing becomes more difficult. Manufacturers also worry about possible warping of the miniature circuit boards and die, which would reduce the yield during assembly.
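Why testing each set before stacking matters can be shown with a toy yield model: if untested sets go into the stack, a single bad one scraps the whole package, so the yields multiply. The sketch below assumes independent defects and a perfect pre-stack test; the yields and the function name are hypothetical.

```python
from math import prod


def stacked_yield(part_yields, assembly_yield, pretested):
    """Expected fraction of good package-on-package units (toy model).

    part_yields: probability that each stacked set (logic, memory, ...) is good.
    pretested: if True, only sets that passed their own test are stacked, and
    the test is idealized as perfect, so only assembly losses remain.
    """
    if pretested:
        return assembly_yield
    return prod(part_yields) * assembly_yield


parts = [0.90, 0.95]  # hypothetical logic-set and memory-set yields
print(f"untested:  {stacked_yield(parts, 0.98, pretested=False):.3f}")
print(f"pretested: {stacked_yield(parts, 0.98, pretested=True):.3f}")
```

A real line falls between the two numbers, since pre-stack tests miss some defects and stacking can itself damage good parts, for example through the warping mentioned above.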

So PoP systems are a little pricey and therefore used only for products whose prices can include a premium for better performance in a smaller, low-power package. Manufacturers of high-end networking products were early adopters of this approach; manufacturers of digital still cameras and cellphones have since joined them. Smartphones and, more recently, tablet computers are using PoPs mainly to integrate application-specific ICs with memory. PoP continues to evolve and will likely migrate into other products further down the consumer-electronics food chain.

Package-in-package (PiP) is another variant of SiP. Instead of just naked die and other components piled onto miniature circuit boards inside a single package, PiP adds packaged die—in other words, chips—into the mix. So PiP puts chips within chips. Semiconductor companies choose this option for business reasons as much as for technical ones—it forces product manufacturers to buy multiple subsystems from the same chip manufacturer. PiP integrates more functions and can improve performance beyond that of PoP systems, but it is less flexible in combining different devices, like memory chips, from different suppliers. It's also hard to test. In some mobile applications—for example, the most advanced smartphones—manufacturers gladly accept these drawbacks because PiP designs can cram even more into a smaller space. But they haven't caught on as widely as the PoP approach.

In all these packaging schemes, the most important consideration is the electrical connections between the multiple die and the miniature circuit boards that link them. The traditional and cheapest technology used for these connections is wire bonding, which is used in about 80 percent of the packages produced today. Wires connect terminals on an individual chip to the little circuit board inside the package. Then electrical paths on that circuit board route signals among chips and to the leads that extend from the package, enabling it to be connected to other devices within a system.

Despite repeated predictions that wire-bond technology has reached its practical physical limit, it continues to reinvent itself: In the past few years, manufacturers reduced the wire diameter to 15 micrometers to enable them to cram more wire terminals onto the precious real estate of a chip's surface. They also began changing the wire materials from gold to copper, in response to the skyrocketing cost of gold.

In a conventional wire-bonded package, the electrical path runs from the closely spaced terminals at the edge of the chip to terminals on the substrate. As the chip shrinks, so does the distance between the individual terminals, and it becomes tricky for designers to avoid short circuits and to keep the wires far enough apart to minimize cross talk.

Photo: ASE Group

TIES THAT BIND: Fanning wires out from all sides of a chip and making those wires thinner gives designers more electrical paths to choose from.

Nevertheless, many innovations are extending the life of this technology. Some manufacturers, for example, are replacing single rows of wires with multiple rows on the four edges of the chip to give designers more options for electrical paths.
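The benefit of extra rows can be estimated with simple perimeter arithmetic: pads per row is the usable edge length divided by the pad pitch, multiplied by four edges and by the number of rows. The die sizes, pitch, and corner keep-out below are hypothetical, and the model ignores the stagger and wire-angle constraints that real multirow layouts must respect.

```python
def perimeter_pad_count(edge_mm: float, pad_pitch_um: float,
                        rows: int = 1, corner_keepout_mm: float = 0.0) -> int:
    """Rough count of wire-bond pads around the four edges of a square die.

    Each extra row is assumed to add a full row of pads at the same pitch;
    real staggered layouts are constrained further by wire angles and loops.
    """
    usable_edge_um = (edge_mm - 2 * corner_keepout_mm) * 1000
    if usable_edge_um <= 0 or pad_pitch_um <= 0 or rows < 1:
        raise ValueError("invalid die geometry")
    pads_per_row = int(usable_edge_um // pad_pitch_um)
    return 4 * rows * pads_per_row


# Hypothetical 50 um pitch with a 0.25 mm keep-out at each corner
for edge_mm in (5.0, 4.0):
    for rows in (1, 2):
        n = perimeter_pad_count(edge_mm, 50, rows=rows, corner_keepout_mm=0.25)
        print(f"{edge_mm} mm die, {rows} row(s): {n} pads")
```

In this model, shrinking the die from 5 mm to 4 mm costs 80 pads with a single row, and adding a second row more than makes up for the loss.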

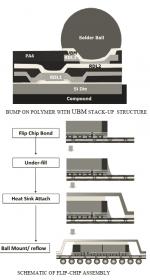

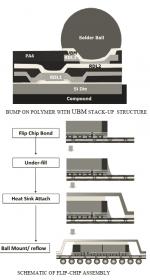

Alternatively, some designers have eliminated the wires altogether and replaced them with "bumps" of solder, gold, or copper. This approach earned the name flip-chip, because the side of the chip with the bumps must be flipped face down to connect to the chip below or to the underlying circuit board. As you can imagine, a bump of metal is much shorter than a long wire and therefore can conduct a signal much faster and at higher bandwidths. However, this advantage comes at a cost—increasing the overall price of the package to 1.5 to 5 times that of a wire-bond version. Not surprisingly, this technology has also gravitated toward industries that need high performance and will pay for it. It is now standard for high-speed and high-bandwidth microprocessors and graphics processors because of its shorter delay time.

A newcomer to the package scene is the wafer-level chip-scale package. This technology is essentially a package without a package—the naked die has extremely tiny solder balls on its active side, allowing it to connect directly to a circuit board. These die are fragile, so to date this process can be used only for very tiny die, and even these typically need to be further protected with a coating on one side. The vast majority of smartphone manufacturers are beginning to embrace this approach.

Designers have found another way to make SiP devices as small as possible—one that might seem obvious. They simply make the wafer thinner—taking a wafer that is, say, a little over 700 µm thick and reducing it to perhaps 100 or even 50 µm or less. Because the thickness of the die eventually determines the thickness of the package, and therefore the size of that device you're carrying in your pocket, that change can make a big impact.
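Thinning pays off most when die are stacked, because each die's thickness is paid once per layer. The sketch below adds up the height of a stack of die joined by die-attach layers; the four-die stack and the 20 µm die-attach thickness are hypothetical, and the substrate and mold cap are left out.

```python
def stack_height_um(n_die: int, die_um: float, bondline_um: float,
                    substrate_um: float = 0.0) -> float:
    """Height of n_die stacked die, each sitting on its own die-attach layer."""
    if n_die < 1:
        raise ValueError("need at least one die")
    return substrate_um + n_die * (die_um + bondline_um)


# Thicknesses from the text (700, 100, 50 um); 4 die and 20 um bondline are hypothetical
for thickness in (700, 100, 50):
    h = stack_height_um(4, thickness, bondline_um=20)
    print(f"{thickness:>3} um die -> 4-die stack about {h / 1000:.2f} mm tall")
```

In this example, going from 700 µm to 50 µm die shrinks the stack from about 2.9 mm to under 0.3 mm, roughly a tenfold reduction.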

Mechanical grinding is the most popular way to thin a wafer. It's just what you'd expect: Manufacturers physically grind the wafer down, typically by rolling it through a slurry of water and abrasive particles or rubbing it with diamond particles embedded in a resin. There are lots of other ways to thin a wafer, including chemical mechanical polishing, which smooths surfaces with the combination of chemical and mechanical forces, and chemical etching, which uses chemical liquids or vapors to remove some of the wafer material.

With the trend toward smaller packages, manufacturers are making die thinner than was ever thought possible. For example, one manufacturer recently gave a private demonstration of a flash memory die 10 µm thick and a tiny RF device measuring 50 by 50 by 5 µm.

SiPs are the best way to pack very different functions into a single electronic device. In the future, the individual pieces in an SiP could be as diverse as RF antennas, photodiodes, and drug delivery tubes—perhaps even a protein layer that could allow the chip to connect with human tissue.

But we're not quite there yet. Putting such complex devices into a single package will require new materials and control of their interactions on the nanometer scale—and perhaps even on the molecular scale. It won't be easy. There will be tough competition as consumers demand smaller and smaller devices that do more and more. Designers are now investigating taking packageless packaging beyond simply attaching naked die to circuit boards; they are beginning to attach naked die directly to each other in three dimensions. Some manufacturers are already making simple versions of these 3-D modules, but this technology has a long way to evolve before it can become a staple of the manufacture of high-volume commercial products.

All these packaging innovations are remarkable, but the real impact has to be measured by what they enable in the real world—and how they will change society. Electronics are woven into the fabric of our lives and are beginning to be woven, literally, into the clothes we wear. Increasingly, they will be implanted in our bodies as well. Pacemakers, defibrillators, and microfluidic pumps for drug delivery are in use; biosensors and other implantable devices that can send data to external computers are on the way. Devices that may allow control of epilepsy, Parkinson's disease, and migraines are already in clinical trials. Future forms of packaging will not only have to protect the electronics from the environment but also shield a sensitive environment—the human body—from the electronics. These innovations will improve our work, our health, our play, and even our longevity.

This article originally appeared in print as "Good Things in Small Packages."

- By Dr. Dan Tracy, www.semi.org

- November 3rd, 2015

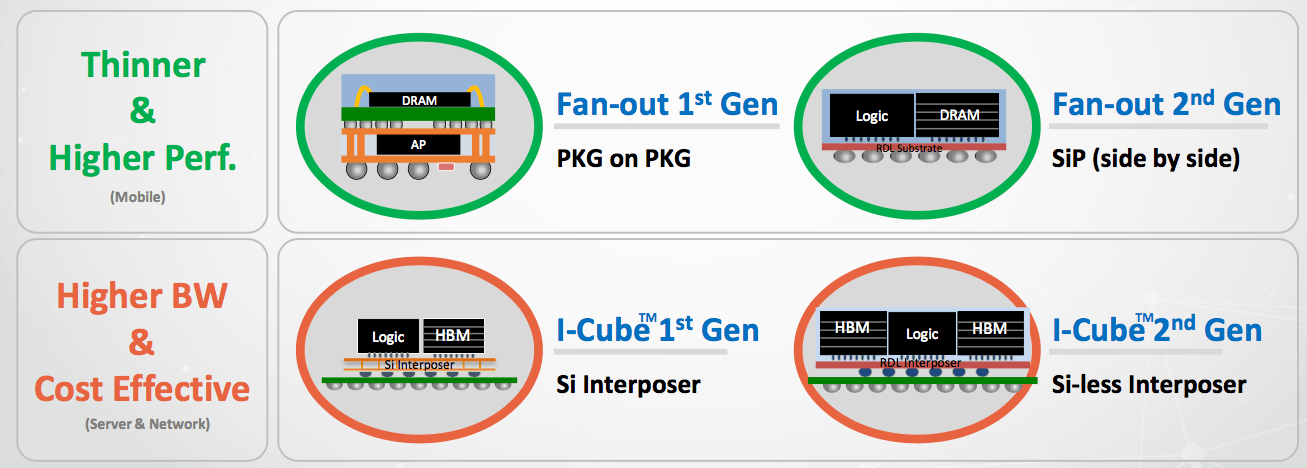

- New package form factors to satisfy high-performance, high-bandwidth, and low power consumption requirements in a thinner and smaller package.

- Packaging solutions to deliver systems-in-package capabilities while satisfying low-cost requirements.

- Shorter lifetimes and differing reliability requirements. For example, high-end smartphones and tablets, the key high reliability requirement is to pass the drop test; and packaging material solutions are essential to delivering such reliability.

- Shorter production ramp times to meet time-to-market demands of end product. This is becoming critical and causes redundancy in capacity to be required, capacity that is underutilized for part of the year

It’s All about Packaging. In this Material World That

We Are Dealing With, Who Is Your Partner?

With

the recent release of Apple’s 6s and the form factors of internet enabled

mobile devices and the emergence of the IoT (Internet of Things), advanced

packaging is clearly the enabling technology providing solutions for mobile

applications and for semiconductor devices fabricated at 16 nm and below

process nodes. These packages are forecasted to grow at a compound annual

growth rate (CAGR) of over 15% through 2019. In addition, the packaging

technologies have evolved and continue to evolve so to meet the growing

integration requirements needed in newer generations of mobile electronics.

Materials are a key enabler to increasing the functionality of thinner and

smaller package designs and for increasing the functionality of

system-in-package solutions.

Figure 1: Packaging Technology Evolution – Great Complexity in Smaller, Thinner Form

Factors, courtesy of TechSearch International, Inc.

The

observations related to mobile products include:

Packaging must provide a low-cost solution and have an infrastructure in place to meet steep ramps in electronics production. Wafer bumping has been a major packaging market driver for over a decade, and the growth in mobile electronics has only accelerated the move towards bumping and flip chip, with finer-pitch copper pillar bump technology ramping up. Leadframe and wirebond technologies nonetheless remain important low-cost alternatives for many devices. Mobile also drives wafer-level packaging (WLP) and fan-out (FO) WLP. New wafer-level dielectric materials and substrate designs are required for these emerging package form factors.

Going forward, the wearable and IoT markets will have varying packaging requirements depending on the application, the end-use environment, and reliability needs. Thin and small are a must, though, as in other applications, cost versus performance will determine which package type is adopted for a given wearable product, so once more leadframe and wirebonded packages could be the preferred solution. And in many wearable applications, materials solutions must provide a lightweight and flexible package.

Such packaging solutions will remain the driver for materials consumption and new materials development, and the outlook for these packages remains strong. Materials will make possible even smaller and thinner packages with more integration and functionality. Low-cost substrates, matrix leadframe designs, and new underfill and die-attach materials are just some of the solutions that reduce material usage and improve manufacturing throughput and efficiency.

SEMI and TechSearch International are once again partnering to prepare a comprehensive market analysis of how the current packaging technology trend will impact packaging manufacturing materials demand and the materials market. The new edition of the “Global Semiconductor Packaging Materials Outlook” (GSPMO) report is a detailed market research study that quantifies and highlights opportunities in the packaging materials market. This new SEMI report is an essential business tool for anyone interested in the plastic packaging materials arena. It will help readers better understand the latest industry and economic trends, the packaging materials market size and trend, and the respective market drivers, with a forecast out to 2019. For example, FO-WLP is a disruptive technology that impacts the packaging materials segment, and the GSPMO addresses this impact.

The new report will be published later in the fourth quarter of 2015. For more information, to download the 2013/2014 sample report, or to preorder, please contact SEMI customer service at 1.877.746.7788 (toll free in the U.S.) or 1.408.943.6901 (international callers). For further questions, please contact SEMI Global Customer Service at 1.408.943.6901 or email mktstats@semi.org.

- By Ed Sperling, semiengineering.com

- September 28th, 2015

Is The Stacked Die Supply Chain Ready?

A handful of big semiconductor companies began taking the wraps off 2.5D and fan-out packaging plans in the past couple of weeks, setting the stage for the first major shift away from Moore’s Law in 50 years.

Those moves coincide with reports of commercial 2.5D chips from chip assemblers and foundries that are now under development. There have been indications for some time that this trend is gathering steam. Equipment makers have been talking with analysts about how advanced packaging will affect their growth plans. After almost a year of delays, high-bandwidth memory was introduced into the market earlier this year. And there have been announcements by foundries and OSATs (outsourced semiconductor assembly and test providers) that 2.5D chips are now in commercial production, with many more on the way.

Still, the process is far from smooth. It’s not that chips can’t be built using interposers or microbumps or even bond wires to include more of what used to be on a PCB in a single package. But compared with the supply chain for planar CMOS, stacking die is a newcomer. The tens of billions of dollars spent on shrinking planar features dwarf the amount that has been spent on packaging multiple chips together, despite the fact that multi-chip modules have been around since the 1990s. Foundry rules are still under development. Some EDA tools and IP are available, but more still need to be optimized for stacked die configurations. And experience in working with these packaging approaches remains limited, even if they are gaining traction.

What’s ready

Nevertheless, chipmakers, IP vendors, packaging houses and foundries are pitching a different story than they were at the beginning of the year. Most now have some sort of advanced packaging strategy in place—a recognition of just how expensive it has become to develop chips at 16/14nm, 10nm and 7nm, and of how much business they’ll leave on the table if they don’t recognize that many chipmakers won’t go there.

Marvell, for example, has just begun rolling out MoChi, which it describes as a “virtual SoC” 2.5D architecture, with the first LEGO-like modules to be added throughout the remainder of 2015 using internally developed interconnect technology.

“The problem is not just cost anymore,” said Michael Zimmerman, vice president and general manager of Marvell’s connectivity, storage and infrastructure business. “It’s the total development effort measured in dollars and years. There are not many suppliers that can justify spending billions of dollars, the time it takes to get these chips to market, and the resources required to make that happen. The goal is to restore reasonable time-to-market by going in a reverse direction. Instead of massive integration, you can break the chip into parts and separate the problems into modules. That allows the pace of innovation in each die to be separate from other die.”

He noted that initially there was a lot of skepticism about the approach, but in the past few months that skepticism has evaporated. “When you consider that the interconnect is 8 gigabits per second for one serial connection, and you can put 25 wires in a 1mm space, that means you can have up to 50 gigabits per second die to die with latency of 8 nanoseconds.”

Similar stories are being repeated more frequently across the industry. ASE Group has been working with AMD since 2007 to bring 2.5D packaging to market.

“We had a cost issue with the interposer,” said Michael Su, AMD Fellow in charge of die stacking design and technology at AMD. “But we have managed to decrease that to a better price point. Two years ago, the technology was still in the development stage. Since then we’ve decreased the number of features, added yield learning and now there are multiple players making interposers.”

The result is a graphics card for the gaming market that is 40% shorter—small enough to fit on a six-inch PCB—runs cooler, at 75° C instead of 90° C, and is 16 decibels quieter. It also offers a 2X performance increase over previous versions based on GDDR5 and twice the density, which allows system makers to turn up the performance in other parts of the system without exceeding the power budget. And with interposers now available commercially from most of the major foundries, Su said the prices will continue to decline.

Put in perspective, though, this was not a trivial project. It took eight years of ironing out the kinks and thousands of iterations of chips to get to that point—and a huge investment by both AMD and ASE.

“There are 240,000 bumps that we needed to connect together,” said Calvin Cheung, vice president of business development and engineering at ASE. “You have to make sure every one of those is connected. We also had to select the right materials and equipment, and to figure out how to pick the right piece of equipment.”
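Cheung's point about checking every one of 240,000 bumps follows from compounding: if each joint fails independently with a small probability p, the chance that all N are good is (1 − p)^N, roughly e^(−Np) for small p. The per-bump failure rates below are hypothetical, and real defects are often correlated rather than independent; the function name is also hypothetical.

```python
import math


def all_bumps_good(n_bumps: int, p_fail: float) -> float:
    """Probability that all n_bumps joints are good, assuming independent failures."""
    if not 0.0 <= p_fail < 1.0:
        raise ValueError("p_fail must be in [0, 1)")
    # exp(n * log1p(-p)) equals (1 - p) ** n but keeps precision for tiny p
    return math.exp(n_bumps * math.log1p(-p_fail))


# 240,000 bumps is ASE's figure; the failure rates are hypothetical
for p in (1e-6, 1e-7):
    print(f"p = {p:g}: {all_bumps_good(240_000, p):.3f} of assemblies fully connected")
```

Under these assumptions, even a one-in-a-million defect rate per bump would lose about a fifth of assemblies, which is why bump-level yield learning matters so much at this scale.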

Cheung noted that a lot of the costs involved depend on volume, meaning prices will drop once volume increases and yield, materials and architectural design are mature enough. But he added that the value of integrating different components on multiple die cannot be overstated, because it provides the flexibility to target multiple market segments with minimal effort and time.

IBM Microelectronics has been working on this technology for at least a decade, as well. It is now part of GlobalFoundries, and the combined company is shipping 2.5D and full 3D IC parts using through-silicon vias. Gary Patton, the company’s CTO, is watching similar trends unfold. “We’re definitely seeing an uptick in requests for quotes for 2.5D solutions,” he said. “As the volumes increase, it helps drive the cost down. And then people see it’s shipping and start to realize this is real and they can use it.”

The story is much the same at TSMC. “The vector continues for the high-performance camp,” said Tom Quan, one of the foundry’s directors. “It offers better bandwidth, and for the consumer market it can be done in high volume with low cost. Some of this will use a silicon interposer. But even if you do away with the PCB and put it all in a package, you get better results.”

TSMC’s offerings in this area come in two flavors, a fan-out technology it calls InFO (integrated fan-out) and a full 2.5D approach it calls CoWoS (chip on wafer on substrate). Quan said the advantage of CoWoS is that it can integrate the highest-performance die using the latest technology with analog sensors built at older technologies. “This is a big market. It includes IoT, automotive, and high-performance computing. CoWoS will address the high-performance needs, InFO will address the other two.”

The first version of TSMC’s plan is expected to roll out in 2016. There are a couple of other iterations planned for InFO, including through-mold vias and through-InFO vias.

View from the trenches

This all sounds like the road to stacked die is fully paved, but companies involved in developing these chips are finding not everything is so perfect yet.

“If you look at planar silicon, from GDSII to the mask shop, there are well-defined specs,” said Mike Gianfagna, vice president of marketing at eSilicon. “If you pass the requirements, which are standard, then the downstream supplier can make the chip. That’s still missing in 2.5D. If you have warping problems, contact problems or yield issues, you don’t know that up front. And if the chip fails, you have to sign waivers that it’s your risk, not someone else’s.”

Gianfagna said what’s particularly troublesome is testing of the interposer. “We don’t have rules for that. It’s good enough to create a design, and you can build it into the cost of designs, but we’re still one to two years away from getting the benefit of yield learning and analysis so you can get chips out that are cheaper, more efficient and more reliable. This is still a big step forward, though. In the past we weren’t sure whether we could build it or that it would yield. We’re now beyond that, and a growing number of companies want to be out in front with this.”

The first companies to fully embrace these issues were DRAM manufacturers, which have been stacking memory die vertically to save space and reduce the distance signals need to travel. The Hybrid Memory Cube (HMC) and high-bandwidth memory (HBM) are both now fully tested and in commercial use.

“By increasing the density you get more performance, compared with more DIMM slots, which makes your system performance go down,” said Lou Ternullo, product marketing director at Cadence. “Customers are all asking for 3D support because they want to be ready.”

The big difference between the HMC and HBM is the interface. HBM uses microbumps, which means that today the only way to connect it to logic is through an interposer. So far there has not been much adoption outside of the graphics market, but Ternullo said that by the end of the year there should be about a half-dozen chips using HBM.

What changes in 2.5D and 3D is that the manufacturers take on more of the ecosystem role to overcome the known-good-die issues. Several sources say this is particularly important for HBM because, unlike conventionally packaged DRAM, it cannot be put through temperature cycles for testing. It has to be tested through the interface once the 2.5D package is completed, and the only way to do that is with built-in self-test (BiST).
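To make the BiST idea concrete, here is a minimal sketch of March C-, a classic textbook memory self-test algorithm, run against a toy simulated memory. It is an illustration only, not the BiST any HBM vendor actually ships; the `Memory` class and its stuck-at fault injection are hypothetical. On-die BiST implements this kind of address-ordered read/write sequence in hardware rather than software.

```python
from typing import Optional


class Memory:
    """Toy bit-per-address memory; optionally forces one cell to a stuck-at value."""

    def __init__(self, size: int, stuck_addr: Optional[int] = None, stuck_val: int = 0):
        self.cells = [0] * size
        self.stuck_addr = stuck_addr
        self.stuck_val = stuck_val

    def write(self, addr: int, val: int) -> None:
        self.cells[addr] = val

    def read(self, addr: int) -> int:
        # A stuck-at cell ignores writes and always returns the stuck value.
        if addr == self.stuck_addr:
            return self.stuck_val
        return self.cells[addr]


def march_c_minus(mem: Memory, size: int) -> list[int]:
    """Run March C- and return the sorted addresses whose reads mismatched."""
    up = range(size)
    down = range(size - 1, -1, -1)
    # Each March element: (address order, [(op, value), ...]).
    # 'w' writes the value; 'r' reads and expects the value.
    elements = [
        (up, [("w", 0)]),
        (up, [("r", 0), ("w", 1)]),
        (up, [("r", 1), ("w", 0)]),
        (down, [("r", 0), ("w", 1)]),
        (down, [("r", 1), ("w", 0)]),
        (up, [("r", 0)]),
    ]
    failures = set()
    for order, ops in elements:
        for addr in order:
            for op, val in ops:
                if op == "w":
                    mem.write(addr, val)
                elif mem.read(addr) != val:
                    failures.add(addr)
    return sorted(failures)


print(march_c_minus(Memory(16), 16))                             # []
print(march_c_minus(Memory(16, stuck_addr=5, stuck_val=1), 16))  # [5]
```

A fault-free memory passes cleanly, while a cell stuck at 1 is caught on the first read-0 pass. Production BiST engines add many more fault models and report results through the package interface.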

Planning for 2.5D

Some of the big changes involve mindset, as well. Just as power and security need to be part of the up-front architecture of any chip these days, whereas in earlier generations they were an afterthought, so do several other considerations: how engineering teams are going to test the components in a 2.5D configuration, how certain IP will yield compared with other IP, and how analog and digital chips interact even if they aren’t on the same die.

“Design for test is one of the critical areas that needs to be considered,” said Asim Salim, vice president of manufacturing operations at Open-Silicon. “Probing microbumps has been a challenge for us. We now have some solutions. But two to three years ago we had to educate people that this is even needed.”

Integrating analog is another issue, and it varies greatly from one package to the next. Salim said that if an A-to-D converter is used to connect to other modules, for example, it will require a different kind of testing than if it’s connected to the ball grid array on the package. The former requires a power-on self-test, while the latter can use external test. Testability is one of the key areas, and getting it wrong can both increase the cost of the design and decrease its reliability.

Another area that needs to be considered up front is I/O coherency and what can be done with new architectural approaches. What’s possible with multiple die is more than what’s possible on a single die. “You can make two die behave like one die,” said Marvell’s Zimmerman. “You also can connect multiple cores on different die and turn it into many cores on different die.”

Full 3D-IC architectures are still expected to take at least a couple more years to reach commercial use, according to a number of companies, but industry sources say work has begun there as well. That problem is even tougher to solve, but compared with 5nm and 3nm, it may be a toss-up as to which approach is more difficult.

Conclusion

As with many developments in the semiconductor industry, when the entire supply chain begins changing direction, the pieces line up quickly.

“We are really in the middle of the shift to 2.5D,” said Wally Rhines, chairman and CEO of Mentor Graphics. “That will drive tools to do the integration of the chip and the package.”

It also will drive new opportunities for companies that have bet on this technology as it matures and becomes much more flexible, with innovations occurring at the module level rather than across an entire chip.

- By Ed Sperling, semiengineering.com
- August 13th, 2015

2.5D Creeps Into SoC Designs

A decade ago top chipmakers predicted that the next frontier for SoC architectures would be the z axis, adding a third dimension to improve throughput and performance, reduce congestion around memories, and reduce the amount of energy needed to drive signals.

The obvious market for this was applications processors for mobile devices, and the first companies to jump on the stacked die bandwagon were big companies developing test chips for high-volume devices. And that was about the last serious statement of direction that anyone heard involving stacked die for a few years.

What’s becoming apparent to chipmakers these days is that stacked die isn’t fading away, but it is changing. TSMC and Samsung reportedly are moving forward on both 2.5D and 3D IC, according to multiple industry sources, and GlobalFoundries continues its work in this area, a direction that will get a big boost with the acquisition of IBM’s semiconductor unit.

“It’s still very definitely an extreme sport for some of our customers,” said Jem Davies, ARM fellow and vice president of technology. “It looks really exciting and it’s possibly the case that somebody who gets this right is going to make some significant leaps. There are some physical rules in this, though. If you can reduce the amount of power dissipated between a device and memory, or if you’ve got multiple chips, the amount they dissipate talking between them is a lot. If you’ve got a chip and memory, the closer you can get these things to each other, the faster they’ll go and the less power they will use. The Holy Grail here is in sight. There are a number of technologies that we’re seeing people looking at using.”

Full

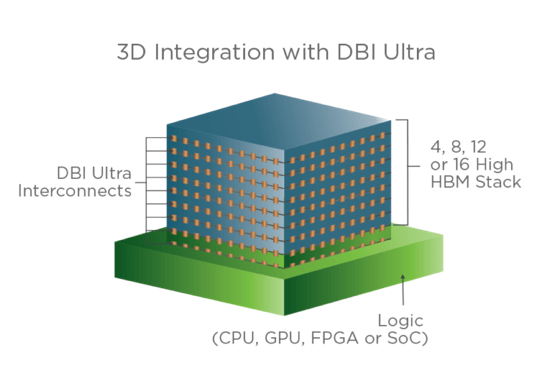

3D stacking with through-silicon vias has gained some ground in the memory

space, notably with backing from Micron and Samsung for the Hybrid Memory

Cube. But the real growth these days is coming in 2.5D configurations using a

“die on silicon interposer” approach, which leverages the interposer as the

substrate on which other components are added, and to a lesser extent

interposers connecting heterogeneous and homogeneous dies. Typically these

packages are being developed in lots of less than 10,000 units, but there are

enough of designs being turned into production chips that questions about

whether this approach will survive are becoming moot.

“People are becoming a lot more comfortable with this technology,” said Robert Patti, CTO at Tezzaron. “Silicon interposers are much more readily available. You can get them from foundries. We build them. And there are some factories now being built in China to manufacture them.”

Relative costs

One of the key factors in making this packaging approach attractive is the price reduction of the interposer technology. Initial quotes several years ago from leading foundries were in the $1 range for small interposers. The price has dropped to 1 to 2 cents per square millimeter for interposer die.
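The per-area price makes a first-order interposer estimate simple arithmetic. The sketch below multiplies die area by the quoted 1 to 2 cents per square millimeter; the 20 mm by 25 mm interposer is a hypothetical size, and the function deliberately leaves out yield, TSV, bumping and assembly costs.

```python
def interposer_cost_usd(width_mm: float, height_mm: float,
                        cents_per_mm2: float) -> float:
    """First-order interposer die cost: area times a per-area price.

    Ignores yield, TSV formation, bumping and assembly, which also
    belong in a total-cost comparison.
    """
    area_mm2 = width_mm * height_mm
    return area_mm2 * cents_per_mm2 / 100.0  # cents -> dollars


# Hypothetical 20 mm x 25 mm interposer at the quoted price range.
low = interposer_cost_usd(20, 25, 1.0)
high = interposer_cost_usd(20, 25, 2.0)
print(f"${low:.2f} to ${high:.2f}")  # $5.00 to $10.00
```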

“This is now PCB-equivalent pricing,” said Patti. “This used to be only for mil/aero, but we’re seeing these in more moderate numbers. They’re being manufactured in batches of hundreds to thousands to tens of thousands. We’ve had people look at this for high-end disk drives. The low-hanging fruit in this area is high-bandwidth memories with logic. The focus is on high bandwidth, and power comes along for the ride.”

What’s less clear is whether alternatives such as Intel’s Embedded Multi-die Interconnect Bridge (EMIB), which is available to the company’s 14nm foundry customers, and organic interposers, which are more flexible but at this point more expensive, will be price competitive with the silicon interposer approaches.

But cost is a relative term here, and certainly not confined just to the cost of the interposer. The semiconductor industry tends to focus on price changes in very narrow segments, such as photomasks, while ignoring total cost from design through manufacturing. That’s true even for finFETs, where the focus has been on reduced leakage current rather than big shifts in thermal behavior, particularly at 10nm and beyond.

HiSilicon Technologies, which designs production 2.5D chips for Huawei, submitted a technical paper to the IEEE International Reliability Physics Symposium in April that focuses on localized thermal effects (LTE), which can affect everything from electromigration to chip aging. The paper identifies thermal trapping behavior as one of the big problems with finFETs at advanced nodes, saying that the average temperature of finFET circuits is lower due to less leakage, but “temperature variation is much larger and some local hot spots may experience very high self-heating.”

Planning for LTE isn’t always straightforward. It can be affected by which functions are on (essentially, who’s using a device and what they’re doing with it) and how functions are laid out on the silicon. And it can be made worse by full 3D packaging, because thermal hot spots may shift depending upon what’s turned on, what’s dark, and how conductive certain parts of the chip are.

“The problem there is thermal hot spot migration,” said Norman Chang, vice president and senior product strategist at Ansys. “At a 3D conference one company showed off a DRAM stack on an SoC. The hot spot was in the center of the chip when it was planar, but once the DRAM was added on top, the hot spot moved to the upper right corner. So the big issue there is how you control thermal migration.”

Chang noted that thermal gradients in 2.5D are comparable to those in planar architectures, where keeping 75% of the silicon dark at any single time to meet power constraints generally keeps it cool enough to avoid problems.

Time to market

Cost is a consideration in the time it takes to design and manufacture stacked die, as well. One of the initial promises of stacked die, particularly 2.5D, was that time to market would be quicker than for a planar SoC because not everything has to be developed at the same process node. That hasn’t proven to be the case.

“The turnaround time is longer,” said Brandon Wang, engineering group director at Cadence. “If you open a cell phone today and look at what’s inside, there are chips all over the board. That requires glue logic, so what happens is that with the next generation companies look at how much they can get rid of. With a silicon interposer you can fit chips into a socket easier. It takes longer, but it helps people win sockets.”

One thing that has helped is that the heterogeneous 2.5D market is mature enough that design teams have some history about what works and what doesn’t. Over time, engineers get more comfortable with the design approach and it tends to speed up. The same trend is observable with double patterning and finFETs, where the initial implementations were much more time-consuming than the current batch of designs. Whether 2.5D design will ever be faster than using pre-characterized IP on planar chips is a matter of debate. Still, at least the gap is shrinking.

But there also are some distinct advantages on the layout side, particularly for networking and datacom applications. While designs may not be quicker at this point, they are cleaner.

“Complex timing sequences and cross-point control are where the real benefits of 2.5D show up,” said Wang. “Cross point is a signal that crosses I/O points, and the tough thing about cross point is data congestion. By going vertical you provide another dimension for a crossover bridge.”

Testing of 2.5D packages has proven to be straightforward, as well. Full 3D logic-on-logic testing has required a highly convoluted testing strategy, which Imec has outlined in the past. More recently, the push for memory stacks on logic has resulted in other approaches. But with 2.5D it has been a matter of tweaking existing tools to deal with the interposer layer.

“You can still do a quick I/O scan chain and run tests in parallel, so there is not a large test time,” said Steven Pateras, product marketing director for test at Mentor Graphics. “You can access the die more easily. The only complication is with the interconnect, and that’s pretty much the same as an MCM (multi-chip module). That’s well understood.”
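One well-known way to test die-to-die interconnect in an MCM is to drive walking-one patterns from one die’s boundary-scan cells and capture them on the other die. The sketch below simulates that idea over a set of interposer nets; the fault model (opens read as 0, shorts behave as a wired-OR) and the function names are hypothetical, and this is not a description of Mentor’s tools.

```python
def capture(driven: list[int], opens: frozenset[int],
            shorts: frozenset[frozenset[int]]) -> list[int]:
    """Simulate the values die B captures when die A drives `driven`.

    Hypothetical fault model: a shorted pair behaves as a wired-OR,
    and an open net always reads 0 at the receiver.
    """
    out = list(driven)
    for pair in shorts:
        i, j = sorted(pair)
        out[i] = out[j] = driven[i] | driven[j]
    for net in opens:
        out[net] = 0
    return out


def walking_ones_test(n_nets: int,
                      opens: frozenset[int] = frozenset(),
                      shorts: frozenset[frozenset[int]] = frozenset()):
    """Drive a single 1 across the nets one at a time and diagnose faults."""
    found_opens: set[int] = set()
    found_shorts: set[frozenset[int]] = set()
    for i in range(n_nets):
        pattern = [1 if k == i else 0 for k in range(n_nets)]
        captured = capture(pattern, opens, shorts)
        if captured[i] == 0:
            found_opens.add(i)  # the driven 1 never arrived
        for k in range(n_nets):
            if k != i and captured[k] == 1:
                found_shorts.add(frozenset((i, k)))  # 1 leaked onto another net
    return found_opens, found_shorts


opens, shorts = walking_ones_test(
    8, opens=frozenset({3}), shorts=frozenset({frozenset({1, 6})}))
print(sorted(opens), [sorted(s) for s in shorts])  # [3] [[1, 6]]
```

Each pattern exercises every net at once, which is why this kind of test runs quickly. A wired-AND short would instead show up as an apparent open under walking ones, which is one reason such tests are often complemented by walking-zero or counting-sequence patterns.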

Reality check

While stacked die pushes slowly into the mainstream, there are a number of other technologies around the edges that could either improve its adoption or slow it down. Fully depleted SOI is one such technology, particularly at 22nm and below, where performance is significantly faster than at 28nm and where operating voltage can be dropped below the voltage in 16/14nm finFETs.

CEA-Leti, for one, has bet heavily on three technology areas: FD-SOI, 2.5D, and monolithic 3D, according to Leti CEO Marie-Noëlle Semeria. “We see the market going in two ways. One will be data storage, servers and consumer, which will need high performance. That will be a requirement (and the opportunity for 2.5D and 3D). Another market is the broad market for IoT, which still has to be better defined. That will include the automotive and self-driving market, medical devices and wearables. For that you need technology with very good performance, low power and low cost. FD-SOI can answer this market.”

Others are convinced that stacked die, and in particular 2.5D, can move further downstream as costs drop and more companies are comfortable working with the technology.

“Right now, 2.5D is at the server and data center level, and it will certainly be in more servers as time goes on,” said Ely Tsern, vice president of the memory products group at Rambus. “But we also see it going forward as manufacturing costs drop and yield increases.”

That’s certainly evident at the EDA tool level, where companies are doing far more architectural exploration than in the past. But whether that means more 2.5D or 3D designs, and how quickly that shift happens, is anyone’s guess.

“Right now there is interest in exploring multiple architectures that could change overall designs,” said Anand Iyer, director of marketing for the low power platform at Calypto. “The big question people are asking is how you save power and keep the same performance level. 2.5D is one way to reduce power, and it’s one that many people are comfortable with. MCMs existed before this and people are quite familiar with them. The new requirement we’re seeing is how to simulate peak power more accurately. There are more problems introduced if power integrity is not good.”

Iyer noted that in previous generations, I/O tended to isolate the power. At advanced nodes and with more communication to more devices, power integrity has become a challenge. 2.5D is one way of helping to minimize that impact, but it’s not the only way.


2.5D Timetable Coming Into Focus

After years of empty promises, the timetable for 2.5D is coming into better focus. Large and midsize chipmakers are behind it, real silicon is being developed, and contracts are being signed.

That doesn’t mean all of the pieces are in place or that market uptake is at the neck of the hockey stick. And it certainly doesn’t mean the semiconductor industry is going to abandon development at the most advanced process nodes, or even improvements at older nodes that could slow migration in all directions.

“Without a doubt not everything needs integration [on a single die],” said Joe Sawicki, vice president and general manager of the Design-To-Silicon Division at Mentor Graphics. “You’re not going to be looking for 6 billion transistors in a wearable device or even a fully integrated factory. But at the same time, the number of customers doing 20nm designs is a huge number of companies.”

Although the benefits are well known, 2.5D remains a new packaging approach with different interconnects and new memory structures. There are still kinks to iron out of the packaging process, work to be done across the supply chain, and new tools to develop. Nevertheless, and in spite of all those caveats, for the first time since the idea began gaining serious attention several process nodes ago, dozens of companies have moved beyond kicking the tires to developing what ultimately will be working silicon.

“Cadence, Mentor Graphics and Ansys are aggressively developing tools to make 3D more predictable,” said Herb Reiter, president of EDA2ASIC consulting. “This kind of information has to flow through the materials to the Outsourced Semiconductor Assembly and Test and foundry and then to the customer.”

Critical pieces under development include the second generation of high-bandwidth memory, which SK Hynix is expected to begin sampling in the second quarter of 2015, new and less costly interposer technologies and approaches, and new organic substrates. There also are questions about whether Intel will allow its Embedded Multi-die Interconnect Bridge (EMIB) to be widely licensed or sold outside of its own foundry. Intel’s bridge technology allows for much tighter pitches than organic substrates.

“What we don’t know is how costly [EMIB] will be to integrate in order to make it flush with the surface of the substrate or whether it’s something you can do with the die,” said Reiter. “But there also is work underway for organic substrates to make them smoother and almost the same pitch as silicon. And there is work being done to put resistors, capacitors and inductors on the interposers, which significantly increases the value proposition of the interposer.”

What’s changed?

Perhaps the biggest shift, though, is in the attitude of companies working with 2.5D and 3D-ICs. What started out as something of an interesting architectural approach to shorten distances and widen signal plumbing is now becoming much more accepted as a future direction for many chipmakers.

“The objection was always cost and risk,” said Charlie Janac, chairman and CEO of Arteris. “The complaint was that interposer technology is expensive and dies don’t necessarily work in a multi-chip package. But dealing with advanced nodes is horrible on the analog side. Memory and logic already have diverged to different process technologies, and it makes sense now to put them on separate dies. It also changes the dynamics of what’s important in an SoC. It makes the interconnect much more important and the packaging houses much more important.”

That change in attitude is widespread, even if the number of design starts is limited. Mike Gianfagna, vice president of marketing at eSilicon, said the company is “actively engaged” in several 2.5D projects.

“There’s still a lot of discussion about what’s the right interposer, whether it should be silicon or another material, how big it should be,” he said. “This is all about getting multiple chips with similar bandwidth on a chip. Not all of it is silicon interposer technology, either. Some of it uses other strategies.”

Open-Silicon likewise has seen limited uptake on 2.5D, even though there is plenty of interest.

“You still have to justify cost on a per customer basis,” said Steve Eplett, design technology and automation manager at Open-Silicon. “If you can leverage a die across multiple customers that changes the economics. The metrics for power consumed between two die also aren’t as good as homogeneous solutions, but we have gotten that down to a minimal and reasonable tradeoff. And with new memories coming on, the power for communication will be a tiny fraction of an off-chip solution. It’s unterminated CMOS at 1.2 volts versus terminated, on-board DDR.”
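Eplett’s contrast between unterminated CMOS and terminated board-level DDR can be made concrete with first-order arithmetic. The sketch below compares switching energy (C·V² per rising edge) on a short interposer trace with switching plus static termination energy on a board trace. Every number in it (capacitances, voltages, 50-ohm termination, 1.6 Gb/s) is a hypothetical illustration, not an Open-Silicon figure, and the termination model is deliberately crude.

```python
def unterminated_pj_per_bit(c_load_pf: float, vdd: float,
                            activity: float = 0.25) -> float:
    """Switching energy per bit for an unterminated CMOS link.

    Each 0->1 transition draws C*V^2 from the supply; `activity` is the
    probability of such a transition per bit (0.25 for random data).
    pF * V^2 gives picojoules directly.
    """
    return activity * c_load_pf * vdd ** 2


def terminated_pj_per_bit(c_load_pf: float, vdd: float, r_term_ohm: float,
                          bit_rate_gbps: float, activity: float = 0.25,
                          p_high: float = 0.5) -> float:
    """Crude terminated-link model: switching energy plus static V^2/R
    dissipated whenever the line is held high (fraction `p_high` of bits).

    Assumes a single ground-referenced termination resistor; real DDR
    termination (to VTT, with driver impedance) differs in detail.
    """
    bit_time_ns = 1.0 / bit_rate_gbps
    static_mw = vdd ** 2 / r_term_ohm * 1e3        # watts -> milliwatts
    static_pj = p_high * static_mw * bit_time_ns    # mW * ns = pJ
    return unterminated_pj_per_bit(c_load_pf, vdd, activity) + static_pj


# Hypothetical values: 0.5 pF interposer trace at 1.2 V, versus a 5 pF
# board trace at 1.5 V with 50-ohm termination running at 1.6 Gb/s.
on_interposer = unterminated_pj_per_bit(0.5, 1.2)
on_board = terminated_pj_per_bit(5.0, 1.5, 50.0, 1.6)
print(f"{on_interposer:.2f} vs {on_board:.1f} pJ/bit")  # 0.18 vs 16.9 pJ/bit
```

Under these assumptions the static termination current dominates the board-level figure, which is the intuition behind calling the in-package link “a tiny fraction” of the off-chip cost.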

What’s still missing?

Not all the pieces are there yet, either. While chips are being built, some of the process isn’t automated or as clear-cut as the move to finFETs or FD-SOI at 28nm.

“We’re not seeing a whole lot of co-design optimization where you can measure the tradeoffs of one chip versus another,” said Drew Wingard, CTO at Sonics. “We need to do mix and match in a more aggressive form. When you put together a system at the PCB level there are standard interfaces. At the board level, you can always wish a new component existed, but most system designers look at what’s available. 2.5D is a practical way of dealing with that.”

Those standard interfaces, meaning the electrical interfaces for tying together different chips inside a single package, are under discussion by standards groups.

“The pool of die that you can integrate in a standard way is a black hole right now,” said Open-Silicon’s Eplett.

Still, most experts, standards groups and chipmakers see stacked die, both 2.5D and 3D ICs, as inevitable. While it makes sense for a company such as Intel to continue pushing its very regular-shaped digital processor technology forward for multiple more generations, the question is what else needs to go on that die. If memory can be offloaded onto separate die, whether with through-silicon vias, interposers, bridges or even bond wires, then distances will be reduced, performance will increase, and the amount of power required to drive signals will be cut significantly.

“There’s a lively debate going on right now about 2.5D and 3D,” said Chris Rowen, a Cadence fellow. “This is a natural outgrowth of what’s already been done. There are limits about how many processes you can put on a die, and if you have digital logic, DRAM and analog, you can’t make it work without moving everything closer together. This is aggregation at the packaging level.”

The outlook remains optimistic, but cautiously so. As Sawicki noted, the obvious application for the first chips was in the data center, where development costs were less of a factor and power was the key metric to worry about. “For a number of reasons, that hasn’t occurred yet, but virtually everything that is required to make that happen we have put in place.”

So will this all change over the next couple years? All signs point to yes. Whether those timetables will remain in place, though, remains to be seen.

Consider Packaging Requirements at the Beginning, Not the End, of the Design Cycle

By eecatalog.com

Today’s integrated circuit designs are driven by size, performance, cost, reliability, and time-to-market. In order to optimize these design drivers, the requirements of the entire system should be considered at the beginning of the design cycle, from the end system product down to the chips and their packages. Failure to include packaging in this holistic view can result in missing market windows or getting to market with a product that is more costly and problematic to build than an optimized product. In this article, we will provide some guidelines covering eight issues where the packaging team should be closely involved with the circuit design team.

Chip Design

As a starting consideration, chip packaging strategies should be developed before chip design is complete. System timing budgets, power management, and thermal behavior can be defined at the beginning of the design cycle, eliminating the sometimes impossible constraints that are handed to the package engineering team at the end of the design. In many instances chip designs end up being unnecessarily difficult to manufacture, have higher-than-necessary assembly costs and have reduced manufacturing yields because the chip design team used minimum design rules when looser rules could have been used.

Examples include using minimum pad-to-pad spacing when the pads could have been spread out, or using an unnecessarily small metal-to-pad clearance (Figure 1). These hard-learned lessons are well understood by the large chip manufacturers, yet often resurface with newer companies and design teams that have not experienced them. Using design-rule minimums puts unnecessary pressure on the manufacturing process, resulting in lower overall manufacturing yields.
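A quick way to see how much spacing is being given away is to compute the pitch available if the pads are spread evenly along the die edge and compare it with the minimum rule. In this sketch the edge length, pad count, corner keep-out and 60 µm minimum pitch are all hypothetical values chosen for illustration.

```python
def even_pad_pitch_um(edge_length_um: float, n_pads: int,
                      corner_keepout_um: float = 0.0) -> float:
    """Center-to-center pitch when n_pads are spread evenly along one die edge.

    The first and last pads sit at the ends of the usable span; the
    corner keep-out at each end is a hypothetical parameter.
    """
    if n_pads < 2:
        raise ValueError("need at least two pads to define a pitch")
    usable_um = edge_length_um - 2 * corner_keepout_um
    return usable_um / (n_pads - 1)


MIN_PITCH_UM = 60.0  # hypothetical minimum pad-pitch design rule

pitch = even_pad_pitch_um(4000.0, 41, corner_keepout_um=200.0)
print(pitch, pitch - MIN_PITCH_UM)  # 90.0 30.0
```

Here evenly spaced pads would have 30 µm of margin over the minimum rule; clustering them at the minimum pitch, as in Figure 1, gives that margin away and tightens the bonding process window for no benefit.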

Packaging

Semiconductor packaging has often been seen as a necessary evil, with most chip designers relying on existing packages rather than customizing the package for optimal performance. Wafer-level and chip-scale packaging methods have further perpetuated the belief that the package is less important and can be eliminated, saving cost and improving performance. In fact, the semiconductor package provides six essential functions: power in, heat out, signal I/O, environmental protection, fan-out/compatibility with surface mounting (SMD), and managing reliability. These functions do not disappear with the implementation of chip-scale packaging; they are only transferred to the printed circuit board (PCB) designer. Passing the buck does not solve the problem, since PCB designers and their tools are not usually expected to give optimal consideration to the essential semiconductor die requirements.

Packages

Packaging technology has evolved considerably over the past 40 years. The evolution has kept pace with Moore’s Law, increasing density while at the same time reducing cost and size. Hermetic pin grid arrays (PGAs) and side-brazed packages have mostly been replaced by lead-frame-based plastic quad flat packs (QFP). Following those developments, laminate-based ball grid arrays (BGA), quad flat no-lead packages (QFN), chip-scale packages and flip-chip direct attach became the dominant choices.

The next generation of packages will employ through-silicon vias to allow 3D packaging with chip-on-chip or chip-on-interposer stacking. Such approaches promise to solve many packaging problems and usher in a new era. The reality is that each package type has its benefits and drawbacks, and no package type ever seems to become completely extinct. The designer needs an in-depth understanding of all of the packaging options to determine how each die design might benefit or suffer from the use of any particular package type. If the designer does not have this expertise, it is wise to call in a packaging team that does.

Miniaturization

The push to put more and more electronics into a smaller space can inadvertently lead to unnecessary packaging complications. The ever-increasing push to produce thinner packages is a compromise against reliability and manufacturability. Putting unpackaged die on the board definitely saves space and can produce thinner assemblies, such as those in smart cards. This chip-on-board (COB) approach often has problems: the die are difficult to bond because of their tight proximity to other components, or they have unnecessarily long bond wires or wires at acute angles that can cause shorts, as PCB designers try to reconcile the board’s manufacturing line-and-space realities with wire-bond requirements.

Additionally, the use of minimum PCB design rules can complicate the assembly process, since PCB etch-process variations must be accommodated. Picking the right PCB manufacturer is important too, because many users see laminate substrate manufacturers and standard PCB shops as equals. Often, designers will use materials and metal systems that were designed for surface mounting but turn out to be difficult to wire bond. Picking a supplier that makes the right metallization tradeoffs and follows the right process disciplines is important in order to maximize manufacturing yields.

Power

Power distribution, including decoupling capacitance and copper ground and power planes, has mostly been the job of the PCB designer. Many users wonder why decoupling is rarely embedded into the package as a complete unit. Cost or package size limitations are typically the reasons cited. The reality is that semiconductor component suppliers usually don’t know the system requirements, power fluctuation tolerance and switching noise mitigation in any particular installation. Therefore power management is left to the system designer at the board level.
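The system-level information that component suppliers lack is exactly what a board designer feeds into the common target-impedance rule of thumb for power delivery: allowed ripple divided by the expected current step. The sketch below computes that target and the smallest ideal capacitor that meets it at one frequency; the rail voltage, ripple budget, load step and frequency are hypothetical, and real capacitors are limited by ESR and ESL, which this ignores.

```python
import math


def target_impedance_ohm(vdd: float, ripple_fraction: float,
                         transient_current_a: float) -> float:
    """PDN rule of thumb: Z_target = (Vdd * allowed ripple) / current step."""
    return vdd * ripple_fraction / transient_current_a


def min_capacitance_f(z_target_ohm: float, freq_hz: float) -> float:
    """Smallest ideal capacitor whose reactance 1/(2*pi*f*C) meets Z_target at freq_hz.

    Ignores ESR and ESL, which limit real decoupling capacitors at high frequency.
    """
    return 1.0 / (2 * math.pi * freq_hz * z_target_ohm)


# Hypothetical rail: 1.0 V, 5% allowed ripple, 2 A load step, checked at 1 MHz.
z = target_impedance_ohm(1.0, 0.05, 2.0)
c = min_capacitance_f(z, 1e6)
print(f"Z_target = {z * 1e3:.0f} mOhm, C >= {c * 1e6:.1f} uF")  # Z_target = 25 mOhm, C >= 6.4 uF
```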

Thermal Management

Miniaturization leaves less volume and less area for heat spreading to dissipate heat. Often, there is no room or project funding available for heat sinks. Managing junction temperature has always been the job of the packaging engineer, who must balance operating and ambient temperatures against package heat flow. Once again, it is important to develop a thermal strategy early in the design cycle that covers die specifics, die-attach material specification, a heat-spreading die-attach pad, thermal balls on BGAs and direct thermal pad attachment during surface mount.
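The balance the packaging engineer strikes can be expressed with the standard steady-state relation Tj = Ta + P × θJA, where thermal resistances along a single heat path add in series. The sketch below applies it in both directions (temperature from power, and a power budget from a temperature limit); the package resistances, power, ambient and 125 °C limit are hypothetical example values.

```python
def junction_temp_c(ambient_c: float, power_w: float, theta_ja_c_per_w: float) -> float:
    """Steady-state junction temperature: Tj = Ta + P * theta_JA."""
    return ambient_c + power_w * theta_ja_c_per_w


def max_power_w(tj_max_c: float, ambient_c: float, theta_ja_c_per_w: float) -> float:
    """Power budget that keeps Tj at or below tj_max_c."""
    return (tj_max_c - ambient_c) / theta_ja_c_per_w


def series_theta(*thetas_c_per_w: float) -> float:
    """Resistances along one heat path (junction-to-case, case-to-ambient, ...) add in series."""
    return sum(thetas_c_per_w)


# Hypothetical package: theta_JC = 5 C/W plus theta_CA = 25 C/W, 2 W die, 50 C ambient.
theta_ja = series_theta(5.0, 25.0)
print(junction_temp_c(50.0, 2.0, theta_ja))  # 110.0
print(max_power_w(125.0, 50.0, theta_ja))    # 2.5
```

Each item in the thermal strategy above (die attach, spreader pad, thermal balls) acts by lowering one of the series terms, which is why it pays to budget them early.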

Signal Input/Output

Managing signal integrity has always been the primary concern of the packaging engineer. Minimizing parasitics, crosstalk, impedance mismatch, transmission line effects and signal attenuation are all challenges that must be addressed. The package must handle the input/output signal requirements at the desired operating frequencies without a significant decrease in signal integrity. All packages have signal characteristics specific to the materials and package designs.
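Impedance mismatch is the easiest of these effects to quantify: at an impedance step, the fraction of the incident voltage that reflects is Γ = (ZL − Z0)/(ZL + Z0), often quoted as return loss in dB. The 50-ohm trace feeding a 65-ohm package route in this sketch is a hypothetical example.

```python
import math


def reflection_coefficient(z_load_ohm: float, z0_ohm: float) -> float:
    """Voltage reflection coefficient at an impedance step: (ZL - Z0) / (ZL + Z0)."""
    return (z_load_ohm - z0_ohm) / (z_load_ohm + z0_ohm)


def return_loss_db(gamma: float) -> float:
    """Return loss in dB (undefined for a perfect match, gamma == 0)."""
    return -20.0 * math.log10(abs(gamma))


# Hypothetical mismatch: a 50-ohm trace driving a 65-ohm package route.
g = reflection_coefficient(65.0, 50.0)
print(f"gamma = {g:.3f}, return loss = {return_loss_db(g):.1f} dB")  # gamma = 0.130, return loss = 17.7 dB
```

About 13% of the incident voltage reflects back at this step; package routing that holds the trace impedance closer to the system value drives Γ toward zero.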

Performance

A number of factors affect performance, including on-chip drivers, impedance matching, crosstalk, power supply shielding, noise and PCB materials, to name a few. The performance goals must be defined at the beginning of the design cycle and tradeoffs made throughout the design process.

Environmental Protection

The designer must also be aware that packaging choices have an impact on protecting the die from environmental contamination and/or damage. Next-generation chip-scale packaging (CSP) and flip chip technologies can expose the die to contamination. While the fab, packaging and manufacturing engineers are responsible for coming up with solutions that protect the die, the design engineer needs to understand the impact that these packaging technologies have on manufacturing yields and long-term reliability.

Involve your Packaging Team

Hopefully, these points have provided some insight into how packaging affects many aspects of design and why it should not be relegated to just picking the right package at the end of the chip design. It is important that your packaging team be involved in the design process from initial specification through the final design review.

In today’s fast-moving markets, market windows are shrinking, so time to market is often the key differentiator between success and failure. Not involving your packaging team early in the design cycle can result in costly rework cycles at the end of the project, manufacturing issues that delay the product introduction or, even worse, unsolvable problems that could have been eliminated had packaging been considered at the beginning of the design cycle.

System design incorporates many different design disciplines. Most designers are proficient in their own domain specialty but not in all domains. An important byproduct of these cross-functional teams is the spread of design knowledge throughout the teams, resulting in more robust and cost-effective designs.

John T. MacKay, President/Founder, Semi-Pac

John and his partner Tom Molinaro founded Semi-Pac in 1988. They both have been involved in the packaging industry since the late 70s. John has deep technical knowledge of the process and equipment used in integrated circuit fabrication and assembly.


Figure 1. In this image, the bonding pads are grouped in tight clusters rather than evenly distributed across the edge of the chip. This makes it harder to bond to the pads and requires more-precise equipment to do the bonding, thus unnecessarily increasing the assembly cost and potentially impacting device reliability.

Semiconductor packaging has often been seen as a necessary evil, with most chip designers relying on existing packages rather than package customization for optimal performance. Wafer level and chipscale packaging methods have further perpetuated the belief that the package is less important and can be eliminated, saving cost and improving performance. The real fact is that the semiconductor package provides six essential functions: power in, heat out, signal I/O, environmental protection, fan-out/compatibility to surface mounting (SMD), and managing reliability. These functions do not disappear with the implementation of chipscale packaging, they only transfer over to the printed circuit board (PCB) designer. Passing the buck does not solve the problem since the PCB designers and their tools are not usually expected to provide optimal consideration to the essential semiconductor die requirements.

Packages

Packaging technology has evolved considerably over the past 40 years, keeping pace with Moore’s Law by increasing density while reducing cost and size. Hermetic pin grid arrays (PGAs) and side-brazed packages have mostly been replaced by lead-frame-based plastic quad flat packs (QFPs). After those developments, laminate-based ball grid arrays (BGAs), quad flat no-lead (QFN) packages, chip-scale packages, and flip-chip direct attach became the dominant package choices.

The next generation of packages will use through-silicon vias (TSVs) to allow 3D packaging with chip-on-chip or chip-on-interposer stacking. Such approaches promise to solve many packaging problems and usher in a new era. The reality, however, is that each package type has its benefits and drawbacks, and no package type ever seems to become completely extinct. The designer needs an in-depth understanding of all the packaging options to determine how a given die design might benefit or suffer from the use of a particular package type. If the designer lacks this expertise, it is wise to call in a packaging team that has it.

Miniaturization

The push to put more and more electronics into a smaller space can inadvertently lead to unnecessary packaging complications. The ever-increasing push for thinner packages comes at the expense of reliability and manufacturability. Putting unpackaged die directly on the board certainly saves space and can produce thinner assemblies, as in smart card applications. This chip-on-board (COB) approach often has problems, however: the die can be difficult to bond because they sit close to other components, or they end up with unnecessarily long bond wires or wires at acute angles that can cause shorts, as PCB designers try to reconcile the board’s manufacturing line-and-space limits with wire-bond requirements.
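
A rough Python sketch of the geometry behind that problem: given a pad position on the die and a bond-finger position on the board, it measures the wire's planar length and how far the wire leans away from the die edge's outward normal, then flags wires beyond placeholder limits. The 3 mm and 45° limits, the coordinate convention, and the function names are assumptions for illustration only; real limits come from the wire-bond process and the assembly house.

```python
import math


def wire_length_mm(pad, finger):
    """Planar pad-to-finger distance; ignores loop height, so real wires are longer."""
    return math.hypot(finger[0] - pad[0], finger[1] - pad[1])


def wire_angle_deg(pad, finger, edge_normal):
    """Angle between the wire and the outward normal of the die edge (0 = straight out)."""
    vx, vy = finger[0] - pad[0], finger[1] - pad[1]
    nx, ny = edge_normal
    length = math.hypot(vx, vy)
    if length == 0:
        raise ValueError("pad and finger coincide")
    cos_a = (vx * nx + vy * ny) / (length * math.hypot(nx, ny))
    cos_a = max(-1.0, min(1.0, cos_a))  # clamp floating-point round-off before acos
    return math.degrees(math.acos(cos_a))


def check_wire(pad, finger, edge_normal, max_length_mm=3.0, max_angle_deg=45.0):
    """Return a list of rule violations; the default limits are hypothetical placeholders."""
    problems = []
    if wire_length_mm(pad, finger) > max_length_mm:
        problems.append("too long")
    if wire_angle_deg(pad, finger, edge_normal) > max_angle_deg:
        problems.append("steep angle")
    return problems


# Pads on the die's right-hand edge, whose outward normal points along +x (units: mm).
print(check_wire((0.0, 0.0), (1.0, 0.0), (1.0, 0.0)))  # -> []
print(check_wire((0.0, 0.0), (1.0, 2.0), (1.0, 0.0)))  # -> ['steep angle']
print(check_wire((0.0, 0.0), (4.0, 0.0), (1.0, 0.0)))  # -> ['too long']
```

Running a check like this over a COB layout before it is released would surface the crowded or stretched wires that the paragraph warns about while they are still cheap to fix.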

Additionally, using minimum PCB design rules can complicate the assembly process, because PCB etch-process variations must also be accommodated. Picking the right PCB manufacturer matters too, since many users treat laminate-substrate manufacturers and standard PCB shops as equals. Designers often choose materials and metal systems that were intended for surface mounting but turn out to be difficult to wire bond. Picking a supplier that makes the right metallization tradeoffs and maintains the right process disciplines is important to maximize manufacturing yields.
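
The etch-variation point can be illustrated with a simple worst-case check. This Python sketch assumes a symmetric ± etch tolerance on bond-finger width, so that a finger which etches wider narrows the gap to its neighbor by the same amount; the function names, the tolerance, and the assembly requirements are hypothetical numbers chosen only to show the arithmetic.

```python
def worst_case_finger_um(drawn_width_um, drawn_space_um, etch_tol_um):
    """Worst-case bond-finger width and gap for a symmetric +/- etch tolerance on width.

    Assumption: an over-etched finger is narrower by etch_tol_um; an under-etched
    finger (and its neighbor) grows, shrinking the gap between them by etch_tol_um.
    """
    return drawn_width_um - etch_tol_um, drawn_space_um - etch_tol_um


def finger_margins_um(drawn_width_um, drawn_space_um, etch_tol_um,
                      min_bond_width_um, min_space_um):
    """Margins (positive = pass) against hypothetical assembly requirements."""
    worst_width, worst_space = worst_case_finger_um(drawn_width_um, drawn_space_um, etch_tol_um)
    return worst_width - min_bond_width_um, worst_space - min_space_um


# Hypothetical numbers: fingers drawn at a 75/75 um minimum rule vs. a relaxed 100/100 um.
for width, space in [(75, 75), (100, 100)]:
    w_margin, s_margin = finger_margins_um(width, space, etch_tol_um=15,
                                           min_bond_width_um=70, min_space_um=50)
    status = "ok" if min(w_margin, s_margin) >= 0 else "fails worst case"
    print(f"{width}/{space} um: width margin {w_margin}, space margin {s_margin}: {status}")
```

With these made-up values, the finger drawn at the minimum passes a nominal check (75 um is wider than the 70 um bondable width) but falls short once etch variation is applied, which is exactly the squeeze that minimum PCB rules create.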

Power

Power distribution, including decoupling capacitance and copper ground and power planes, has mostly been a job for the PCB designer. Many users wonder why decoupling is rarely embedded into the package as a complete unit. Cost or package-size limitations are the reasons typically cited. The reality is that semiconductor component suppliers usually don’t know the system requirements, power-fluctuation tolerance, or switching-noise mitigation needed in any particular installation. Therefore, power management is left to the system designer at the board level.
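
A common first-order way to size board-level decoupling is the target-impedance rule of thumb, Z_target = (V_dd × allowed ripple) / transient current. The Python sketch below applies it, then estimates how many identical capacitors in parallel would bring their combined ESR under that target. Treating n parallel capacitors as ESR/n ignores inductance and frequency dependence, and every number shown is hypothetical; this illustrates the arithmetic, not a PDN design method from the article.

```python
import math


def target_impedance_ohm(vdd_v, ripple_fraction, transient_current_a):
    """Largest PDN impedance that keeps supply droop within ripple_fraction of vdd_v."""
    return vdd_v * ripple_fraction / transient_current_a


def parallel_caps_needed(target_ohm, esr_ohm):
    """Identical capacitors needed so that ESR / n <= target (ignores ESL and resonance)."""
    return math.ceil(esr_ohm / target_ohm)


# Hypothetical rail: 1.0 V core, 5% allowed ripple, 2 A load step, 90 mOhm ESR capacitors.
z_target = target_impedance_ohm(1.0, 0.05, 2.0)
print(f"target impedance: {z_target * 1000:.1f} mOhm")
print(f"capacitors needed: {parallel_caps_needed(z_target, 0.090)}")
```

The inputs here (allowed ripple and the size of the load step) are precisely the system-level facts that, as the paragraph notes, a component supplier usually does not know.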

Thermal Management

Miniaturization leaves less volume and less heat-spreading area to dissipate heat. Often there is no room, or no project funding, for heat sinks. Managing junction temperature has always been the job of the packaging engineer, who must balance operating and ambient temperatures against the package’s heat flow. Once again, it is important to develop a thermal strategy early in the design cycle, one that covers die specifics, the die-attach material specification, a heat-spreading die-attach pad, thermal balls on BGAs, and direct thermal-pad attachment during surface mounting.
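
The balance the packaging engineer strikes can be written with the standard steady-state relation T_J = T_A + P × θ_JA, where θ_JA is the junction-to-ambient thermal resistance. This minimal Python sketch, with hypothetical numbers, shows how lowering θ_JA (for example, by soldering a thermal pad to the board) changes both the junction temperature and the allowable power. A single θ_JA value is a simplification, since it depends on the board, airflow, and mounting.

```python
def junction_temp_c(ambient_c, power_w, theta_ja_c_per_w):
    """Steady-state junction temperature from a single junction-to-ambient resistance."""
    return ambient_c + power_w * theta_ja_c_per_w


def max_power_w(tj_max_c, ambient_c, theta_ja_c_per_w):
    """Power the package can dissipate before the junction reaches tj_max_c."""
    return (tj_max_c - ambient_c) / theta_ja_c_per_w


# Hypothetical part: 1.5 W at 45 C ambient, 125 C junction limit.
for label, theta in [("no thermal pad", 40.0), ("thermal pad soldered", 25.0)]:
    tj = junction_temp_c(45.0, 1.5, theta)
    p_max = max_power_w(125.0, 45.0, theta)
    print(f"{label}: Tj = {tj:.1f} C, max power = {p_max:.2f} W")
```

Because each item in the thermal strategy (die-attach material, thermal balls, thermal-pad attachment) changes θ_JA, deciding them early fixes how much power budget the design actually has.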

Signal Input/Output

Managing signal integrity has always been the primary concern of the packaging engineer. Parasitics, crosstalk, impedance mismatch, transmission-line effects, and signal attenuation all must be minimized. The package must handle the input/output signal requirements at the desired operating frequencies without significantly degrading signal integrity. Every package has signal characteristics specific to its materials and design.
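
Impedance mismatch, one of the challenges listed above, can be quantified with the standard reflection coefficient Γ = (Z_L − Z_0)/(Z_L + Z_0) and the corresponding return loss, −20·log10|Γ| dB. The short Python sketch below computes both for a hypothetical 50 Ω trace meeting package paths of different impedance; the impedance values are illustrative, and this lumped formula ignores frequency-dependent losses.

```python
import math


def reflection_coefficient(z_load_ohm, z0_ohm):
    """Fraction of the incident voltage wave reflected at an impedance step."""
    return (z_load_ohm - z0_ohm) / (z_load_ohm + z0_ohm)


def return_loss_db(gamma):
    """Return loss in dB; a perfect match (gamma == 0) has infinite return loss."""
    if gamma == 0:
        return math.inf
    return -20.0 * math.log10(abs(gamma))


# Hypothetical: a 50-ohm board trace feeding package paths of different impedance.
for z_package in (50.0, 60.0, 75.0):
    g = reflection_coefficient(z_package, 50.0)
    print(f"Z = {z_package:.0f} ohm: gamma = {g:.3f}, return loss = {return_loss_db(g):.1f} dB")
```

A larger return loss means less of the signal bounces back at the package boundary, which is why package impedance is worth matching to the board early.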

Performance

Many factors affect performance, including on-chip drivers, impedance matching, crosstalk, power-supply shielding, noise, and PCB materials, to name a few. Performance goals must be defined at the beginning of the design cycle, with tradeoffs made throughout the design process.

Environmental Protection

The designer must also be aware that packaging choices affect how well the die is protected from environmental contamination and/or damage. Next-generation chip-scale packaging (CSP) and flip-chip technologies can expose the die to contamination. While the fab, packaging, and manufacturing engineers are responsible for developing solutions that protect the die, the design engineer needs to understand the impact these packaging technologies have on manufacturing yields and long-term reliability.

Involve Your Packaging Team

Hopefully, these points have shown how packaging affects many aspects of design, and why it should not be relegated to picking a package at the end of chip design. Your packaging team should be involved in the design process from initial specification through the final design review.

In today’s fast-moving markets, market windows are shrinking, so time to market is often the key differentiator between success and failure. Not involving your packaging team early in the design cycle can result in costly rework at the end of the project, manufacturing issues that delay product introduction, or, even worse, unsolvable problems that could have been avoided had packaging been considered at the beginning of the design cycle.

System design incorporates many different design disciplines, and most designers are proficient in their own specialty rather than in every domain. An important byproduct of cross-functional teams is that design knowledge spreads throughout them, resulting in more robust and cost-effective designs.

John T. MacKay, President/Founder, Semi-Pac

John and his partner Tom Molinaro founded Semi-Pac in 1988. Both have been involved in the packaging industry since the late 1970s. John has deep technical knowledge of the processes and equipment used in integrated circuit fabrication and assembly.

Manufacturing And Packaging Changes For 2015

Good times lie ahead for the semiconductor industry, but it will have to navigate some tricky issues in 2015.

The predictions are divided into four areas: Markets, Design, Semiconductors, and Tools and Flows. This segment explores predictions related to semiconductors and packaging.

2014 was a pivotal year for the semiconductor industry: it was the year the ecosystem recognized that Moore’s Law, while still on track technically, had become irrelevant to all but a few chipmakers. 28nm has become the node at which most people are likely to design for a long time, and the industry will start to improve that node in ways not seen in the past. New processes, materials and devices will be developed for 28nm, and packaging solutions such as 2.5D and 3D will become as important as process development.

The industry itself is doing so well that it is heading for a squeeze. “We see approximately 5% to 7% growth in semiconductor business for 2015 and 12% in the pure-play foundry business,” says Graham Bell, vice president of marketing at Real Intent. “Leading pure-play foundry TSMC will grow faster than the industry average and in 2015 its business will surpass that of all other pure-play foundries combined.”

Couple that with a prediction from The 2015 Foundry Almanac, and there could be trouble ahead: “Wafer-fab utilization at the four largest pure-play IC foundries will increase to an estimated 92% in 2014 compared to 89% in 2013 and 88% in 2012.” That does not leave much headroom for growth unless the fabs start adding capacity.

Other parts of the industry are also getting squeezed. “The worldwide market for DRAMs will rise 16% to $54.1 billion next year (2015),” according to DRAMeXchange forecasts. The company predicts that smartphones and tablet computers will drive that growth, with mobile DRAM accounting for 40% of the total, up from about 36% in 2014.

Sticking around at 28nm

Synopsys is convinced that 28nm is here to stay. Three different people within the company were ready to defend their conviction. “28nm will have a very, very long lifespan,” says Marco Casale-Rossi, product marketing manager within the Design Group of Synopsys. “This will be strengthened by FD-SOI, which may be a very compelling solution if supported by foundries.”

Rich Goldman, vice president of corporate marketing for Synopsys, provides a little more background. “As the focus is on cost, low power and integration rather than on processing power, the favored processes will be established process nodes at 28nm and above. As it is the last process node that does not require double patterning, 28nm will be a particularly long-lived node, becoming increasingly popular. This will play well for foundries offering specialty processes. FD-SOI will likely be applied to extend the capabilities and longevity of 28nm.”

These sentiments are echoed by Navraj Nandra, senior director of marketing for DesignWare analog/mixed-signal intellectual property, embedded memories and logic libraries within Synopsys. “The 28nm node is the mainstay of many devices and predictions are that this will be a ‘long node,’ meaning tape-outs will continue until 2020. The trend here is that some SoC developers are staying on 28nm rather than moving to FinFET technologies due to costs or re-tooling their EDA and IP flows. To address this market, the semiconductor foundries are offering an array of 28nm technologies, the two most interesting are TSMC 28HPC and 28 FD-SOI.”

Moore’s Law is still alive

None of this means that Moore’s Law is technically dead; in some markets it may even be accelerating. “The high-risk, high-reward transitions to new nodes at 16/14nm will be moving very quickly to 10nm and then 7nm,” believes Chi-Ping Hsu, senior vice president, chief strategy officer for EDA and chief of staff to the CEO at Cadence.

According to Nandra, three factors are driving fabrication technologies today. “The requirements for increased functionality, lower power and smaller form factors in mobile consumer devices are driving the demand for smaller technology nodes such as 14nm and 10nm. Using these technologies they benefit from the significant reduction in power consumption, both dynamic and leakage. Another key benefit has been performance since these technologies use FinFET devices that have much higher drive. All the major semiconductor fabrication suppliers are projecting volume production in 2015 for 16/14 FinFET.”
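
Nandra’s point about lower dynamic power can be illustrated with the standard first-order switching-power relation, P_dyn = α × C × V_dd² × f. The Python sketch below uses made-up values for activity factor, switched capacitance, supply voltage, and frequency; they are not figures for any 16/14nm or 10nm process, and leakage, which the quote also mentions, is not modeled.

```python
def dynamic_power_w(activity, switched_cap_f, vdd_v, freq_hz):
    """First-order CMOS switching power: alpha * C * Vdd^2 * f."""
    return activity * switched_cap_f * vdd_v ** 2 * freq_hz


def dynamic_power_ratio(cap_scale, vdd_old_v, vdd_new_v, freq_scale=1.0):
    """New-node dynamic power as a fraction of the old, at the same activity factor."""
    return cap_scale * (vdd_new_v / vdd_old_v) ** 2 * freq_scale


# Hypothetical block: 10% activity, 1 nF switched capacitance, 0.9 V, 1 GHz.
old = dynamic_power_w(0.1, 1e-9, 0.9, 1e9)
# Hypothetical shrink: 30% less switched capacitance and a 0.8 V supply at the same clock.
ratio = dynamic_power_ratio(cap_scale=0.7, vdd_old_v=0.9, vdd_new_v=0.8)
print(f"old: {old:.3f} W, new: {old * ratio:.3f} W ({ratio:.0%} of old)")
```

Because the supply voltage enters squared, even a modest voltage reduction accounts for a large share of the savings in this toy example.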